TL;DR: A recent Minikube version might not be able to read old profiles. In this post we will see how to fix an invalid Minikube profile, or at least how I did it in my case.

minikube profile list, invalid profile

Last Saturday, I had the privilege of speaking at GIB Melbourne online, where I presented on the Self-hosted Azure API Management Gateway. The presentation included a demo on Minikube, and I spent a couple of days preparing my cluster and making sure everything was ready.

One day before the presentation, Minikube suggested that I upgrade to the latest version, and I thought: “what is the worst thing that can happen?” But then the responsible part of my brain begged me not to fall into this trap, and I stopped. Thank god I did!

After the presentation I decided to upgrade, so I moved to version 1.8.1 (I can’t remember which version I had before), but then none of my clusters worked!

When I tried to list them using the command “minikube profile list”, I found them listed under the invalid profiles.

Oh, this is not good! Was this update a breaking change that renders my clusters unusable? Or is it that the new Minikube version doesn’t understand the old profile configuration? And is deleting my clusters really the only way to solve the problem?! I am not happy.

Can I fix the configs?

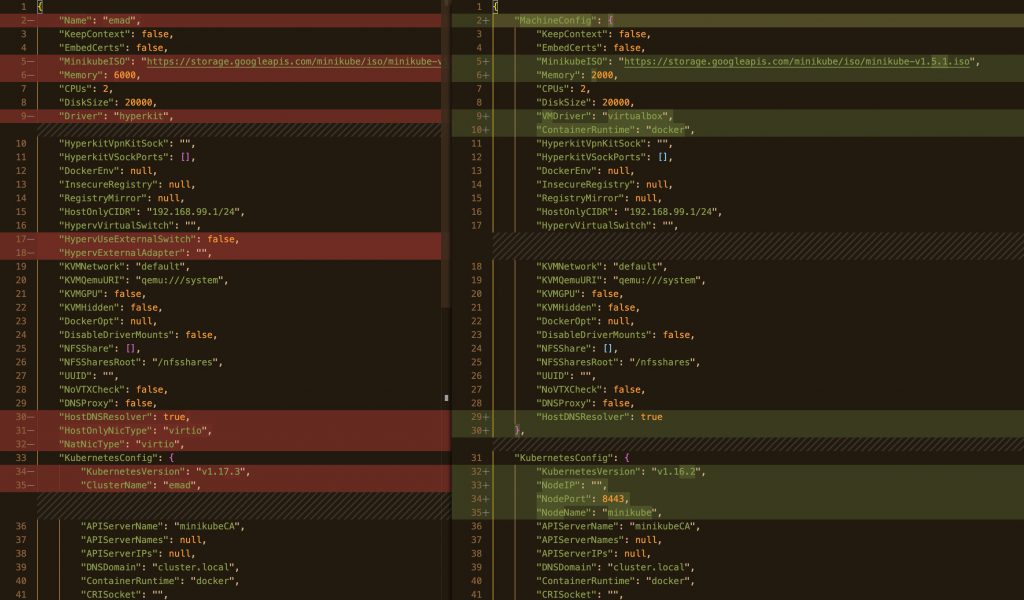

Before worrying about breaking changes, I wanted to check what a valid profile looks like after the update, so I created a new cluster and compared the two profiles. You can find a cluster’s profile in .minikube/profiles/[ProfileName]/config.json.
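The comparison can also be done mechanically. Below is a minimal Python sketch, not something from Minikube itself: it flattens two parsed config.json files into dotted key paths and reports which paths exist on only one side. The helper names (`key_paths`, `compare_profiles`, `load_profile`) are made up for this post, and `load_profile` assumes the default location under your home directory.

```python
import json
from pathlib import Path


def key_paths(node, prefix=""):
    """Return the set of dotted key paths found in a parsed JSON value."""
    paths = set()
    if isinstance(node, dict):
        for key, value in node.items():
            path = f"{prefix}.{key}" if prefix else key
            paths.add(path)
            paths |= key_paths(value, path)
    elif isinstance(node, list):
        # Treat all list elements as sharing one shape, marked with "[]".
        for item in node:
            paths |= key_paths(item, f"{prefix}[]")
    return paths


def compare_profiles(old, new):
    """Return (only_in_old, only_in_new) as sorted lists of key paths."""
    old_paths, new_paths = key_paths(old), key_paths(new)
    return sorted(old_paths - new_paths), sorted(new_paths - old_paths)


def load_profile(name, home=None):
    """Load .minikube/profiles/<name>/config.json (default location assumed)."""
    base = Path(home) if home else Path.home()
    path = base / ".minikube" / "profiles" / name / "config.json"
    return json.loads(path.read_text())
```

Calling `compare_profiles(load_profile("old-cluster"), load_profile("new-cluster"))` with your own profile names gives two lists: the paths that disappeared and the paths that are new.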

The following are the differences that I have noticed:

- There is no “MachineConfig” node in the configuration any more; most of its properties have moved one level up in the JSON path.
- The “VMDriver” property is renamed to “Driver”.
- The “ContainerRuntime” property is removed.
- Four new properties are introduced:
  - HypervUseExternalSwitch
  - HypervExternalAdapter
  - HostOnlyNicType
  - NatNicType
- A “Nodes” collection is added, where each JSON node represents a Kubernetes cluster node. Each node has the following properties:
  - Name
  - IP
  - Port
  - KubernetesVersion
  - ControlPlane
  - Worker
- In “KubernetesConfig”, the node properties have moved to the new “Nodes” collection mentioned above:
  - “NodeIP” moved to “IP”
  - “NodePort” moved to “Port”
  - “NodeName” moved to “Name”
- A new property “ClusterName” is added.
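To make the list above concrete, here is a rough Python sketch of the reshaping it describes. It is only an illustration of the differences I noticed, not Minikube's own migration logic (as far as I know there isn't one). Several things are assumptions on my part: the function name `migrate_profile`, the placeholder values for the four new properties, the spelling HypervUseExternalSwitch (chosen to match HypervExternalAdapter), placing “ClusterName” inside “KubernetesConfig” and setting it to the profile name, and marking the single node as both control plane and worker.

```python
import copy

# Assumption: placeholder values for the newly introduced properties.
NEW_PROPERTY_DEFAULTS = {
    "HypervUseExternalSwitch": False,
    "HypervExternalAdapter": "",
    "HostOnlyNicType": "virtio",
    "NatNicType": "virtio",
}


def migrate_profile(old, profile_name):
    """Return a reshaped copy of an old-format profile (input is not modified)."""
    old = copy.deepcopy(old)
    machine = old.pop("MachineConfig", {})
    kube = old.pop("KubernetesConfig", {})

    # MachineConfig properties move one level up; ContainerRuntime is dropped.
    new = {k: v for k, v in machine.items()
           if k not in ("VMDriver", "ContainerRuntime")}
    new.update(old)  # keep any other top-level properties as they were
    new["Driver"] = machine.get("VMDriver", "")  # VMDriver -> Driver

    for key, value in NEW_PROPERTY_DEFAULTS.items():
        new.setdefault(key, value)

    # Node properties leave KubernetesConfig and become an entry in Nodes.
    node = {
        "Name": kube.pop("NodeName", ""),
        "IP": kube.pop("NodeIP", ""),
        "Port": kube.pop("NodePort", 0),
        # Assumption: the version is read from KubernetesConfig.
        "KubernetesVersion": kube.get("KubernetesVersion", ""),
        "ControlPlane": True,  # assumption: single-node cluster
        "Worker": True,        # assumption: single-node cluster
    }
    kube["ClusterName"] = profile_name  # assumption: same as the profile name
    new["KubernetesConfig"] = kube
    new["Nodes"] = [node]
    return new
```

Treat the output as a starting point and compare it against a freshly created profile before overwriting anything; keeping a backup of the original config.json is a good idea.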

The Solution

So what I did was change the old profile to match the new format, setting the new and changed properties to the values that made the most sense, following the list above. All of it was straightforward except for the node IP address: it was missing!

Digging a little deeper, I found the IP address value (and other properties) in the machine configuration at “.minikube/machines/[clustername]/config.json”. I copied these values from there, started my cluster, and it was resurrected from the dead!
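For reference, the copy step could look roughly like the Python sketch below. I did this by hand, so take it as an untested-in-anger illustration. The key path `Driver.IPAddress` inside the machine config is an assumption based on what my file looked like, so check your own config.json first; the function names are made up for this post.

```python
import json
from pathlib import Path


def load_machine_config(cluster_name, home=None):
    """Load .minikube/machines/<cluster_name>/config.json (default location assumed)."""
    base = Path(home) if home else Path.home()
    path = base / ".minikube" / "machines" / cluster_name / "config.json"
    return json.loads(path.read_text())


def machine_ip(machine_config):
    """Return the IP stored in a parsed machine config, or None if absent."""
    # Assumption: the address lives under Driver.IPAddress.
    return machine_config.get("Driver", {}).get("IPAddress") or None


def fill_node_ip(profile, machine_config):
    """Set the IP of the first entry in the profile's Nodes collection."""
    ip = machine_ip(machine_config)
    if ip is None:
        raise ValueError("no IP address found in machine config")
    profile["Nodes"][0]["IP"] = ip
    return profile
```

Raising an error when no address is found is deliberate: writing an empty IP back into the profile would just reproduce the original problem.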

I would have loved it if Minikube itself took care of fixing the configs rather than suggesting that I delete the profiles. Or maybe that can be a Pull Request :).

I hope this helps.
